Spring Batch MultiResourceItemReader example

In this tutorial, we will show you how to read items from multiple resources (multiple csv files), and write the items into a single csv file.

Tools and libraries used

- Maven 3

- Eclipse 4.2

- JDK 1.6

- Spring Core 3.2.2.RELEASE

- Spring Batch 2.2.0.RELEASE

P.S. In this example, 3 CSV files (reader) are combined into a single CSV file (writer).

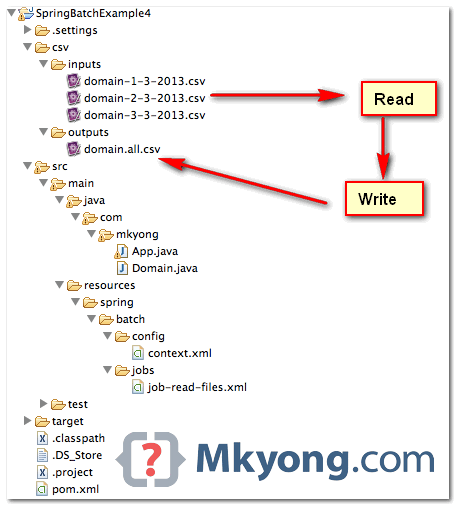

1. Project Directory Structure

Review the final project structure, a standard Maven project.

2. Multiple CSV Files

There are 3 CSV files; later we will use MultiResourceItemReader to read them one by one.

csv/inputs/domain-1-3-2013.csv

1,facebook.com

2,yahoo.com

3,google.com

csv/inputs/domain-2-3-2013.csv

200,mkyong.com

300,stackoverflow.com

400,oracle.com

csv/inputs/domain-3-3-2013.csv

999,eclipse.org

888,baidu.com
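To make "read them one by one" concrete, here is a minimal plain-Java sketch of the idea. It is not Spring Batch's implementation: it only lists the files that match a glob pattern, sorts them by name, and collects their lines in that order. The names CsvMergeSketch and readAll are made up for illustration, and the code assumes Java 8 or later (the tutorial itself targets JDK 1.6).

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.DirectoryStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// Hypothetical helper, for illustration only.
public class CsvMergeSketch {

    // Reads every file in inputDir whose name matches the glob,
    // in file-name order, and returns all lines as one list.
    public static List<String> readAll(Path inputDir, String glob) {
        List<Path> files = new ArrayList<>();
        try (DirectoryStream<Path> stream = Files.newDirectoryStream(inputDir, glob)) {
            for (Path file : stream) {
                files.add(file);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }

        // All paths share the same parent, so this sorts by file name.
        Collections.sort(files);

        List<String> lines = new ArrayList<>();
        for (Path file : files) {
            try {
                lines.addAll(Files.readAllLines(file)); // reads as UTF-8
            } catch (IOException e) {
                throw new UncheckedIOException(e);
            }
        }
        return lines;
    }
}
```

Calling CsvMergeSketch.readAll(Paths.get("csv/inputs"), "domain-*.csv") on the three files above should return their eight lines, ordered by file name.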

3. Spring Batch Jobs

A job to read resources that match the pattern csv/inputs/domain-*.csv and write them into a single csv file, domain.all.csv.

resources/spring/batch/jobs/job-read-files.xml

<?xml version="1.0" encoding="UTF-8"?>

<beans xmlns="http://www.springframework.org/schema/beans"

xmlns:batch="http://www.springframework.org/schema/batch"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://www.springframework.org/schema/batch

http://www.springframework.org/schema/batch/spring-batch-2.2.xsd

http://www.springframework.org/schema/beans

http://www.springframework.org/schema/beans/spring-beans-3.2.xsd

">

<import resource="../config/context.xml" />

<bean id="domain" class="com.mkyong.Domain" />

<job id="readMultiFileJob" xmlns="http://www.springframework.org/schema/batch">

<step id="step1">

<tasklet>

<chunk reader="multiResourceReader" writer="flatFileItemWriter"

commit-interval="1" />

</tasklet>

</step>

</job>

<bean id="multiResourceReader"

class="org.springframework.batch.item.file.MultiResourceItemReader">

<property name="resources" value="file:csv/inputs/domain-*.csv" />

<property name="delegate" ref="flatFileItemReader" />

</bean>

<bean id="flatFileItemReader"

class="org.springframework.batch.item.file.FlatFileItemReader">

<property name="lineMapper">

<bean class="org.springframework.batch.item.file.mapping.DefaultLineMapper">

<property name="lineTokenizer">

<bean

class="org.springframework.batch.item.file.transform.DelimitedLineTokenizer">

<property name="names" value="id, domain" />

</bean>

</property>

<property name="fieldSetMapper">

<bean

class="org.springframework.batch.item.file.mapping.BeanWrapperFieldSetMapper">

<property name="prototypeBeanName" value="domain" />

</bean>

</property>

</bean>

</property>

</bean>

<bean id="flatFileItemWriter"

class="org.springframework.batch.item.file.FlatFileItemWriter">

<property name="resource" value="file:csv/outputs/domain.all.csv" />

<property name="appendAllowed" value="true" />

<property name="lineAggregator">

<bean

class="org.springframework.batch.item.file.transform.DelimitedLineAggregator">

<property name="delimiter" value="," />

<property name="fieldExtractor">

<bean

class="org.springframework.batch.item.file.transform.BeanWrapperFieldExtractor">

<property name="names" value="id, domain" />

</bean>

</property>

</bean>

</property>

</bean>

</beans>
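The job above refers to com.mkyong.Domain, which is not listed in this article. Here is a minimal sketch of what it could look like, assuming it is a plain bean whose id and domain properties match the names given to the tokenizer and the field extractor. The field types are a guess; the real class is in the download.

```java
// Hypothetical sketch of the Domain bean; in the project it would
// live in the com.mkyong package to match the bean definition.
public class Domain {

    private int id;        // filled from the "id" token
    private String domain; // filled from the "domain" token

    // A public no-argument constructor (the implicit default here) plus
    // setters let a bean mapper populate the object; getters let a
    // field extractor read the values back for writing.
    public int getId() {
        return id;
    }

    public void setId(int id) {
        this.id = id;
    }

    public String getDomain() {
        return domain;
    }

    public void setDomain(String domain) {
        this.domain = domain;
    }
}
```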

resources/spring/batch/config/context.xml

<beans xmlns="http://www.springframework.org/schema/beans"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="

http://www.springframework.org/schema/beans

http://www.springframework.org/schema/beans/spring-beans-3.2.xsd">

<!-- store job metadata in memory -->

<bean id="jobRepository"

class="org.springframework.batch.core.repository.support.MapJobRepositoryFactoryBean">

<property name="transactionManager" ref="transactionManager" />

</bean>

<bean id="transactionManager"

class="org.springframework.batch.support.transaction.ResourcelessTransactionManager" />

<bean id="jobLauncher"

class="org.springframework.batch.core.launch.support.SimpleJobLauncher">

<property name="jobRepository" ref="jobRepository" />

</bean>

</beans>

4. Run It

Create a Java class and run the batch job.

App.java

package com.mkyong;

import org.springframework.batch.core.Job;

import org.springframework.batch.core.JobExecution;

import org.springframework.batch.core.JobParameters;

import org.springframework.batch.core.launch.JobLauncher;

import org.springframework.context.ApplicationContext;

import org.springframework.context.support.ClassPathXmlApplicationContext;

public class App {

public static void main(String[] args) {

App obj = new App();

obj.run();

}

private void run() {

String[] springConfig = { "spring/batch/jobs/job-read-files.xml" };

ApplicationContext context = new ClassPathXmlApplicationContext(springConfig);

JobLauncher jobLauncher = (JobLauncher) context.getBean("jobLauncher");

Job job = (Job) context.getBean("readMultiFileJob");

try {

JobExecution execution = jobLauncher.run(job, new JobParameters());

System.out.println("Exit Status : " + execution.getStatus());

} catch (Exception e) {

e.printStackTrace();

}

System.out.println("Done");

}

}

Output. The content of the three CSV files is read and combined into a single CSV file.

csv/outputs/domain.all.csv

1,facebook.com

2,yahoo.com

3,google.com

200,mkyong.com

300,stackoverflow.com

400,oracle.com

999,eclipse.org

888,baidu.com

Download Source Code

Download it – SpringBatch-MultiResourceItemReader-Example.zip (12 kb)

Can you also provide a sample working with ResourceAware? I need to do something while looping over a folder. The folder has multiple files, and I need to do something after each one is read.

Just one note:

If your files are not in the folder when the batch starts, the framework will set an empty array as the resources. For example, in a "real life" batch you copy your *.csv files from an FTP folder into a local folder and then start to process them with the FlatFileItemWriter. So the framework needs to set the resources property right after the copy step, not at the start of the Spring Batch job.

Solution:

Set scope="step" on the MultiResourceItemReader bean tag. The Spring Batch framework will then look up the content of the folder when it reaches this step.
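The timing problem behind this note can be shown in plain Java, without Spring. This is only an analogy with made-up names (LateBindingSketch, countCsvFiles), not how step scope is implemented: a value computed eagerly at start-up does not see files copied later, while a value computed on demand does.

```java
import java.io.File;
import java.io.IOException;
import java.nio.file.Files;
import java.util.function.Supplier;

// Hypothetical demo class, for illustration only (Java 8+).
public class LateBindingSketch {

    // Counts the .csv files that exist in the folder at the moment of the call.
    public static int countCsvFiles(File folder) {
        String[] names = folder.list((dir, name) -> name.endsWith(".csv"));
        return names == null ? 0 : names.length;
    }

    public static void main(String[] args) throws IOException {
        File folder = Files.createTempDirectory("inputs").toFile();

        // Resolved once, at "start-up", while the folder is still empty.
        int eager = countCsvFiles(folder);

        // Resolved only when asked, like a lookup done when the step runs.
        Supplier<Integer> lazy = () -> countCsvFiles(folder);

        // Simulates the copy step that runs before the reading step.
        new File(folder, "domain-1.csv").createNewFile();

        System.out.println("eager: " + eager);      // eager: 0
        System.out.println("lazy:  " + lazy.get()); // lazy:  1
    }
}
```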

When I set scope="step", I get: Caused by: java.lang.IllegalArgumentException: Input resource must be set

Any idea?

Thanks for the help.

Hi-

Your site is an excellent resource for solving problems. Is it possible to use a regular expression such as ^[0-9]{14}(.txt) for the resources property?

Thanks in advance,

Hi,

How can I read data from three REST endpoints and write it to a single file?

Hi,

I need to read two CSV files with different values and insert them into two different tables in the DB simultaneously. I tried it with a single file, and it works fine for me using Spring Batch. But when I use two files, it throws an invalid number of tokens exception. Please let me know how to achieve this with Spring Batch, since I am new to Spring and Spring Batch.

Thanks in advance.

Regards,

Prabhu

Hi mkyong, I have to read two files in a job, as I require them in my code. Can I read multiple files in one job but maintain separate objects? Is that possible?

Hi mkyong, I found an issue in your example that can cause a lot of confusion and take a long time to solve. The Domain bean must be defined as a prototype; otherwise, the singleton domain instance will be shared by all items:

Example in your case:

If you define a commit interval of 4 (commit-interval="4"), the output file will be:

200,mkyong.com

200,mkyong.com

200,mkyong.com

200,mkyong.com

888,baidu.com

888,baidu.com

888,baidu.com

888,baidu.com

The output data is then invalid. To fix it, define the Domain bean with prototype scope so that the same instance is not shared; the job then generates the expected output:

1,facebook.com

2,yahoo.com

3,google.com

200,mkyong.com

300,stackoverflow.com

400,oracle.com

999,eclipse.org

888,baidu.com
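The effect described in this comment can be reproduced without Spring. The following is only a plain-Java sketch with made-up names (SharedInstanceDemo, Item, run), not Spring Batch code: it maps each line onto an item, collects items into chunks, and writes each chunk once it is full. With shareInstance set to true, every line is mapped onto the same object, which is what a singleton-scoped bean amounts to.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical demo class, for illustration only.
public class SharedInstanceDemo {

    // Stand-in for the mapped item; not the tutorial's Domain class.
    static class Item {
        int id;
        String domain;

        @Override
        public String toString() {
            return id + "," + domain;
        }
    }

    // Maps each "id,domain" line to an item and writes items in chunks.
    public static List<String> run(List<String> lines, int chunkSize, boolean shareInstance) {
        List<String> written = new ArrayList<>();
        List<Item> chunk = new ArrayList<>();
        Item shared = new Item();

        for (String line : lines) {
            String[] parts = line.split(",");
            // Singleton behaviour reuses one object; prototype behaviour creates a new one.
            Item item = shareInstance ? shared : new Item();
            item.id = Integer.parseInt(parts[0]);
            item.domain = parts[1];
            chunk.add(item);
            if (chunk.size() == chunkSize) {
                flush(chunk, written);
            }
        }
        flush(chunk, written); // write any remaining partial chunk
        return written;
    }

    private static void flush(List<Item> chunk, List<String> written) {
        for (Item item : chunk) {
            written.add(item.toString());
        }
        chunk.clear();
    }

    public static void main(String[] args) {
        List<String> lines = Arrays.asList(
                "1,facebook.com", "2,yahoo.com", "3,google.com",
                "200,mkyong.com", "300,stackoverflow.com", "400,oracle.com",
                "999,eclipse.org", "888,baidu.com");
        System.out.println(run(lines, 4, true));  // shared instance
        System.out.println(run(lines, 4, false)); // new instance per line
    }
}
```

With a chunk size of 4, the shared-instance run writes 200,mkyong.com four times and then 888,baidu.com four times, because each chunk holds four references to the same object and only the last values mapped onto it survive. The run with a new instance per line writes the eight lines unchanged.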

If the job in the above example is run a second time, even with CSV file names different from those used in the first run, it will throw a JobInstanceAlreadyCompleteException.

Please add an incrementer to your Job, like ".incrementer(new RunIdIncrementer())".

Is there a way to provide different delimiters for different files?

Hi Mkyong,

Can you help me to read files from ftp folder?

I can read a file from FTP, but I need to read all files from a folder.

Hi Minh, you can use org.apache.commons.net.ftp.FTPClient or com.jcraft.jsch.ChannelSftp for accessing FTP or SFTP folders.

Hi,

I need to read multiple files, process them and write them to their respective response files. Please let me know how to achieve this using Spring Batch.

Thanks in advance.

Santhosh

How do I create distinct files with different names in a batch, using information obtained from the database? I mean:

Query the database to obtain a list of records.

Split the records according to a field, for example, country.

For each country, I have to create the corresponding file with its information.

Hi mkyong ,

I have two different jobs running in the same application. Is it possible to have the two jobs share the same JobRepository and, in turn, the same datasource?

What is the recommended practice?